From self-driving cars to Snapchat filters, many of the computing devices we use every day are able to ‘see’ the world around them in some way. But how do they do this?

Humans see when electromagnetic waves hit the retina in the eye and are transformed into electrical signals, which then travel along the optic nerve to the brain. However, it’s what our brains do with these electrical signals that allows us to recognise objects and faces, and to process real-time visual information. If we want to replicate this behaviour in machines, we need a sort of mechanical eye. That’s pretty easy: we can just use a camera… but then we need to give this digital picture to a computer and get it to ‘see’ somehow.

In 1966, professor of computer science Marvin Minsky assigned one of his students a research project to complete over the summer: to link a camera to a computer and get the computer to describe what it saw*. As it turns out, actually doing this is very complicated, and it’s something that we’ve only (sort of) worked out how to do in the last few years.

But before we get to describing images, let’s go through the basics of how a computer processes images taken by a camera.

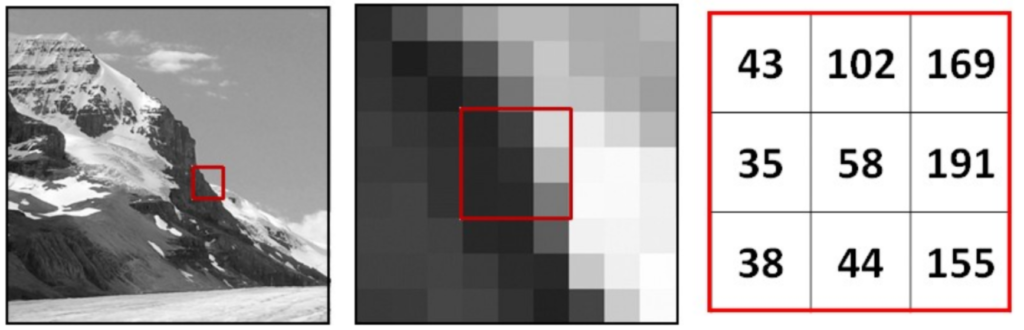

Images are recorded in a camera as pixels, each represented by a number. Luckily, computers are great with numbers! We can represent a black and white image as a sort of grid of numbers. Each number corresponds to the greyscale value of the pixel, where 0 is black, 255 is white, and the numbers in between are shades of grey.
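To make this concrete, here is a small sketch of a greyscale image as a grid of numbers. The 4x4 image and the `describe_pixel` helper are made up for illustration; a real photo would have millions of pixels.

```python
# A tiny 4x4 greyscale "image": 0 is black, 255 is white,
# and anything in between is a shade of grey.
image = [
    [0, 0, 255, 255],
    [0, 128, 128, 255],
    [0, 128, 128, 255],
    [0, 0, 255, 255],
]

def describe_pixel(value):
    """Turn a greyscale value into a rough human-readable shade."""
    if value == 0:
        return "black"
    if value == 255:
        return "white"
    return "grey"

height = len(image)      # number of rows
width = len(image[0])    # number of pixels in each row

print(f"{width}x{height} image")
for row in image:
    print(" ".join(describe_pixel(value).ljust(5) for value in row))
```

Each inner list is one row of pixels, so `image[1][2]` is the pixel in the second row, third column.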

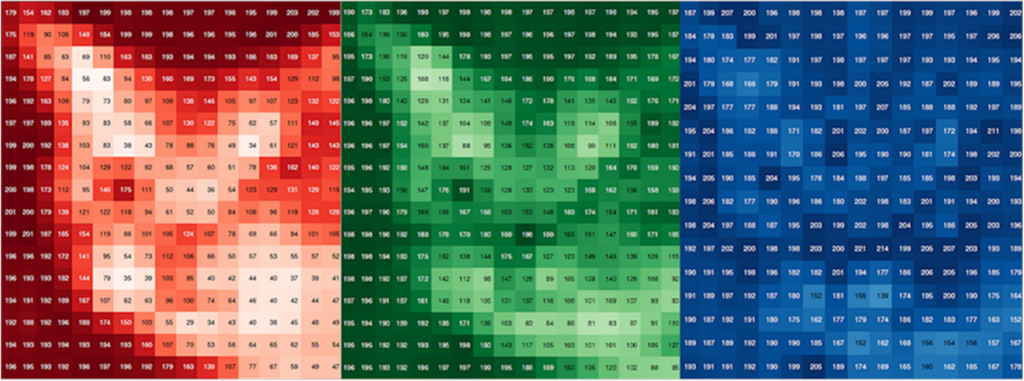

Representing coloured images is a bit more complicated: we need more numbers to represent the colour values. There are different ways we can do this, but the most common is RGB (red, green, blue). The ‘red channel’ is another grid of numbers, where each number represents how ‘red’ that pixel is, and the same goes for the green and blue channels. Combining these three channels gives us the colours in the image, sort of like overlapping different coloured lights to get the right one.
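Here is a rough sketch of how three channel grids could combine into one colour image. The 2x2 values and the `combine_channels` helper are invented for this example and are not taken from any particular image library.

```python
# Three 2x2 grids, one per colour channel (0 = none, 255 = full strength).
red = [[255, 0], [0, 255]]
green = [[0, 255], [0, 255]]
blue = [[0, 0], [255, 0]]

def combine_channels(red, green, blue):
    """Zip three channel grids into one grid of (r, g, b) pixels."""
    return [
        [(r, g, b) for r, g, b in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(red, green, blue)
    ]

pixels = combine_channels(red, green, blue)
for row in pixels:
    print(row)
```

The top-left pixel ends up pure red, the top-right pure green, the bottom-left pure blue, and the bottom-right, with red and green both at full strength, is yellow.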

(If you want to learn more about how digital cameras create images, check out this video)

So, any image taken by a digital camera can be represented as a whole bunch of numbers: a single 2D grid for a black and white image, or three grids representing the levels of red, green, and blue in each pixel of a colour image. Altogether, these numbers are like the ‘electrical signal’ that travels from our eyes to our brains, representing an image of the world in a way that a computer can work with.

But even if we get a computer to read all the numbers that represent an image, it can’t tell us anything about what’s in the image. We can teach the computer how to recognise what’s in an image using machine learning. This basically means we just show a bunch of images to a software program, and this program generates a mathematical function that best separates these images into the different categories we’re trying to distinguish. We’ll call this program our ‘model’. There are many different types of machine learning models that are useful for different tasks. The type of model most commonly used for classifying images is called a convolutional neural network. If you want to learn more about how these models actually work, check out this link.

Let’s say we have a collection of images where one half of the images are of cats, and the other half are of dogs. Each image is stored as the different grids of numbers we discussed earlier, and each image is labelled saying whether it contains a cat or a dog. Since both dogs and cats can be many different colours, the red, blue, and green channels won’t really be able to help us, so we will just be focusing on the black and white values.
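If our images start out in colour, one way to get the black and white values is to take a weighted average of the three channels. The sketch below assumes the commonly used weights 0.299, 0.587 and 0.114 (green counts most because our eyes are most sensitive to it); other weightings exist, and the `to_greyscale` name is just for illustration.

```python
def to_greyscale(pixel):
    """Collapse one (r, g, b) pixel into a single 0-255 brightness value."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def image_to_greyscale(pixels):
    """Apply the conversion to every pixel in a grid of (r, g, b) tuples."""
    return [[to_greyscale(pixel) for pixel in row] for row in pixels]

# A made-up 2x2 colour image: red, green, blue, and white pixels.
colour_image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

grey_image = image_to_greyscale(colour_image)
for row in grey_image:
    print(row)
```

Notice that pure green comes out much brighter than pure blue, even though both are at full strength in their own channel.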

One by one, we will show an image to our model, along with the label of whether the image is of a cat or a dog. Over time, the model will be able to identify patterns in the pixels of each image that help it to tell the difference between cats and dogs. For example, most cats have pointy ears and a long tail, and they all look fairly similar, while different breeds of dog can look very different. These are the sorts of patterns that the model will learn.

Now, to test how well the model can identify cats and dogs, we can show it some more pictures with no label to tell it which it is. If we showed it enough examples earlier, it should have developed a pretty good strategy to identify cats and dogs. This strategy is called a mapping function, and it’s a sort of complex mathematical function that can take an input image (represented by numbers) and output the label of what is in the image (a cat or a dog).
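To give a feel for ‘training’ and a ‘mapping function’, here is a deliberately tiny stand-in. It is not a convolutional neural network: it just averages the training images for each label and then picks whichever average a new image is closest to. The 4-pixel ‘images’ and the `train` and `predict` names are invented for this sketch.

```python
def train(examples):
    """Average the pixel values of all training images for each label."""
    sums, counts = {}, {}
    for pixels, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(pixels)
            counts[label] = 0
        sums[label] = [s + p for s, p in zip(sums[label], pixels)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in sums[label]]
        for label in sums
    }

def predict(model, pixels):
    """The 'mapping function': image numbers in, label out."""
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(model, key=lambda label: distance(model[label], pixels))

# Made-up labelled training data: each "image" is just 4 greyscale pixels.
training_set = [
    ([200, 210, 30, 40], "cat"),
    ([190, 220, 20, 50], "cat"),
    ([40, 30, 210, 200], "dog"),
    ([50, 20, 220, 190], "dog"),
]

model = train(training_set)

# New images the model has never seen, given to it without a label.
print(predict(model, [180, 200, 60, 45]))
print(predict(model, [60, 45, 180, 200]))
```

Real models learn far richer patterns than an average image, but the shape of the process is the same: labelled examples go in during training, and afterwards the model maps unlabelled images to labels.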

There are a few ways that machine learning models can fail, and they all have to do with training, that is, which examples we show the model to teach it the difference between cats and dogs. Say that the only type of dog we show the model is a Labrador. It’s not going to be able to identify other breeds of dog with good accuracy because they look so different.

This model only knows about cats and dogs. If we show it a picture of something else, it will have no idea what it’s looking at. It will say it is either a cat or a dog because, to the model, these are the only two things that exist. To perform more complicated computer vision tasks, we’ll need a lot more data.
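Here is a small sketch of why that happens. Many classifiers turn their raw scores into probabilities that always add up to 1 across the labels they know, so there is no way for them to answer ‘neither’. The scores below are hypothetical numbers standing in for what a model might produce when shown a photo of a toaster.

```python
import math

LABELS = ["cat", "dog"]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores):
    """Return the most likely known label and its probability."""
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=lambda i: probs[i])
    return LABELS[best], probs[best]

# Hypothetical raw scores for an image that is neither a cat nor a dog.
toaster_scores = [0.3, 0.1]
label, confidence = classify(toaster_scores)
print(f"The model says: {label} ({confidence:.0%} sure)")
```

Whatever the input, the answer is always one of the labels in `LABELS`; the model can be unsure, but it cannot say ‘toaster’.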

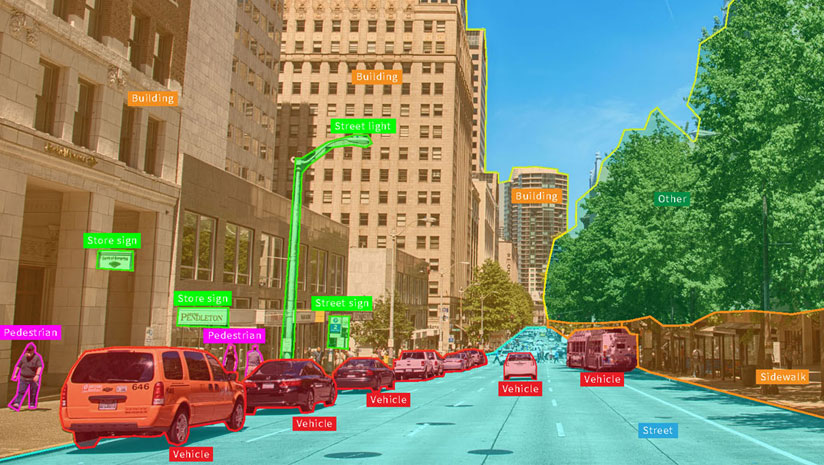

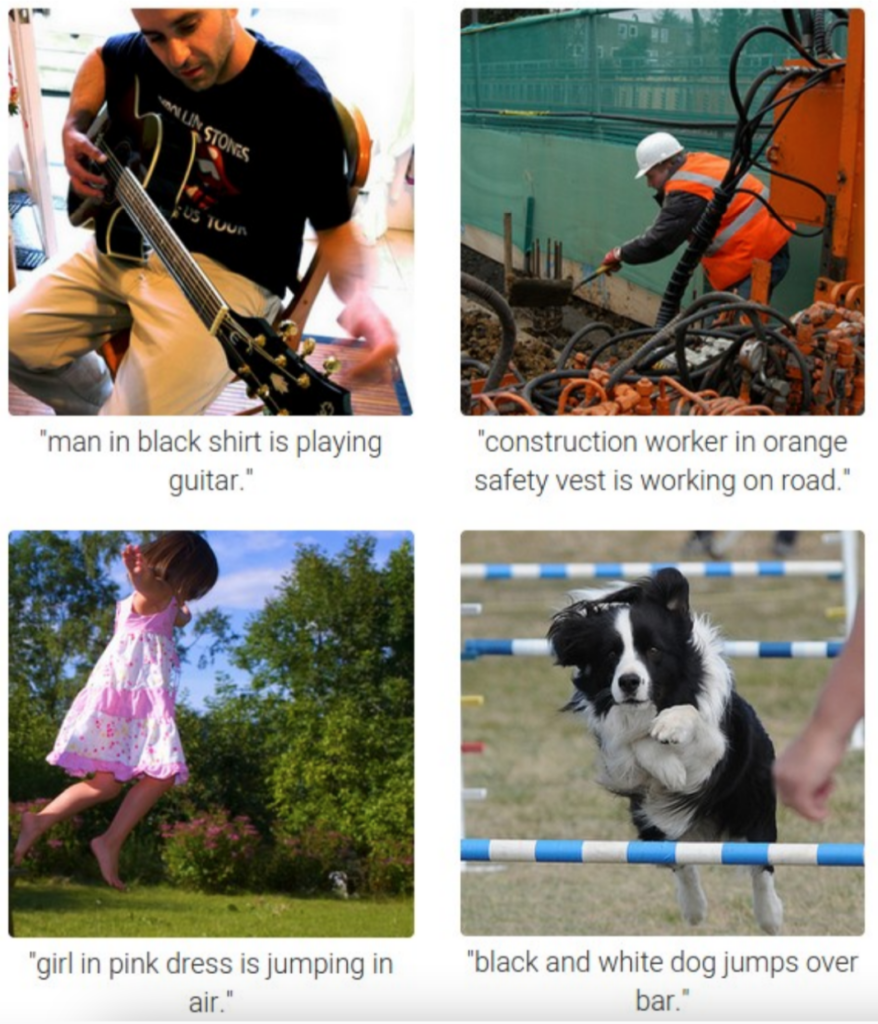

So, back to the original question given way back in 1966—how can we get a computer to describe what it sees? Building on our previous example, we could show the computer lots and lots of different images, with each part of the image labelled. That way, it could highlight and list the different objects in the image.

In more complicated examples, we can even get the model to recognise the relationships between objects.

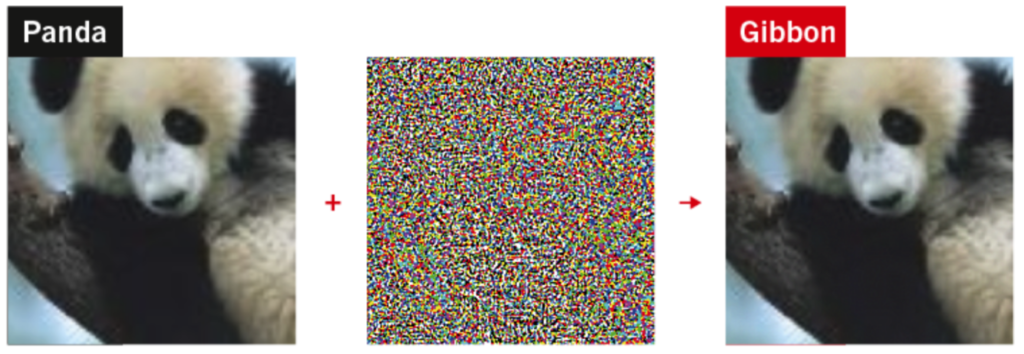

This seems pretty cool, but we don’t really understand what machine learning models are actually recognising when they identify objects in images. Sometimes, it’s clear. They are looking for certain shapes, edges, and colours. Other times, the things they are recognising aren’t even visible to the human eye. It turns out, we can slightly change the pixels of an image, so slightly that humans can’t see any difference, and it can completely confuse computers.
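The idea can be sketched with a toy example. Instead of a real neural network, assume a very simple model that multiplies each pixel by a weight and adds up the results, calling the image a cat if the total is positive. The weights and the 8-pixel image are made up; attacks on real models are more involved, but nudging every pixel a tiny amount in the direction that pushes the score the wrong way is the same basic trick.

```python
# Hypothetical weights of a toy linear model: positive total means "cat".
weights = [0.9, -0.8, 0.7, -0.9, 0.8, -0.7, 0.9, -0.8]

def score(pixels):
    return sum(w * p for w, p in zip(weights, pixels))

def label(pixels):
    return "cat" if score(pixels) > 0 else "dog"

def perturb(pixels, epsilon):
    """Nudge each pixel by epsilon in the direction that raises the score."""
    nudged = [p + epsilon * (1 if w > 0 else -1) for w, p in zip(weights, pixels)]
    # Keep every value inside the valid 0-255 range.
    return [min(255, max(0, p)) for p in nudged]

# A made-up 8-pixel greyscale image that the model labels as a dog.
image = [120, 130, 125, 128, 122, 127, 124, 126]
sneaky_image = perturb(image, epsilon=1)

biggest_change = max(abs(a - b) for a, b in zip(image, sneaky_image))
print(label(image), "->", label(sneaky_image))
print("Largest change to any pixel:", biggest_change)
```

No pixel moves by more than 1 out of 255, which a person would never notice, yet the many tiny nudges add up to enough to flip the model’s answer.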

Another interesting example of this is in facial recognition software, which can be fooled by unusual makeup. As humans, we can still recognise the facial features of the people in the image, but the strange shapes and harsh edges of the makeup confuse computers, since they are looking for specific patterns in the pixels.

Here is an example of specially-designed glasses that cause facial recognition models to classify you as a particular person (in this example, actress Milla Jovovich).

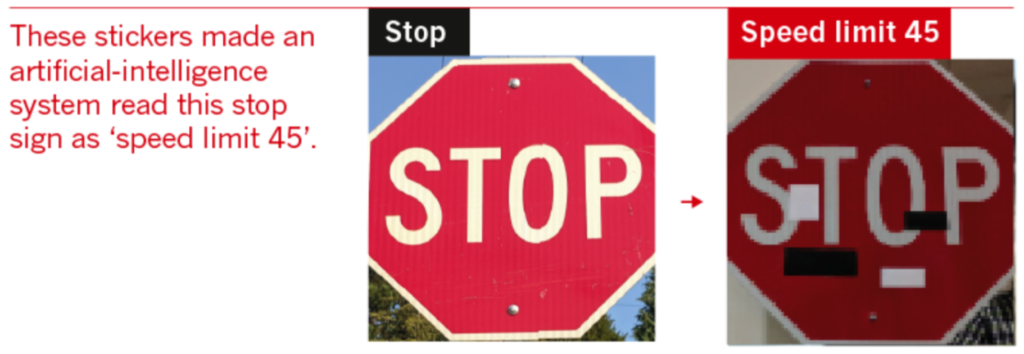

For self-driving cars, a computer getting confused could be life-threatening! Look at this stop sign, which, with a few carefully placed stickers, will be read as a different sign.

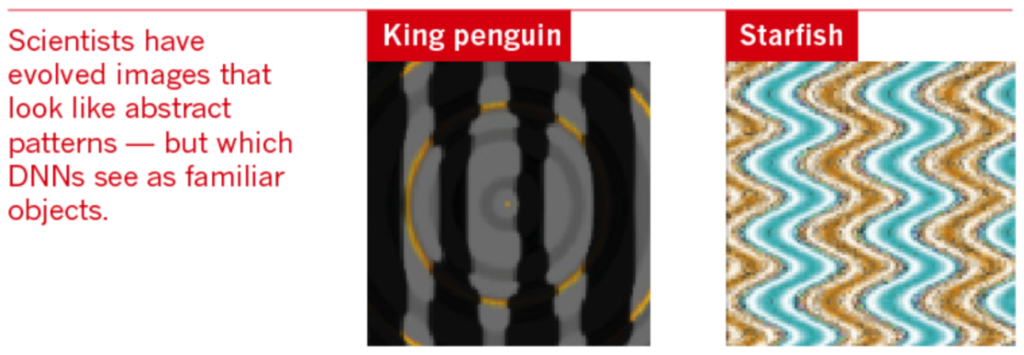

Researchers have also figured out how to generate specific patterns that will make computers think they are looking at a particular object.

So, computers can see…sort of. They don’t see like humans do, and we don’t really understand how they learn to see things, but we can still do some pretty useful things with computer vision. That said, it’s really easy to confuse computers in ways that can sometimes be funny, but have the potential to be really dangerous! There’s still a long way to go before we can say that computers have reliable vision, but research in this field is a hot topic for AI experts (and a huge focus for computer scientists at the University of Adelaide!)

*A note on the 1966 anecdote about Marvin Minsky: this is a popular story that was even told by my computer vision professor last semester, but after further research it seems to be something of an urban legend. A similar project was given around the same time by Seymour Papert at MIT to a group of 10 students, and instead of getting the computer to describe what it saw, it was a far simpler project about image segmentation and object detection. This may have been where the rumour came from, but it is also possible that the story about Marvin Minsky is true. This was still a very ambitious project for the time and it’s a problem that wasn’t solved until recent years, but it’s likely that the real events were not quite as outlandish as the original anecdote.

About Olivia: Olivia studies Computer Science, majoring in Artificial Intelligence. In the future, she hopes to contribute to projects in AI and software engineering that make the world better! When she’s not studying you will find her painting (digitally), writing (sci-fi!), or watching Doctor Who.